Expert interview: Why Europe must not become a digital colony

Sebastian Dietrich

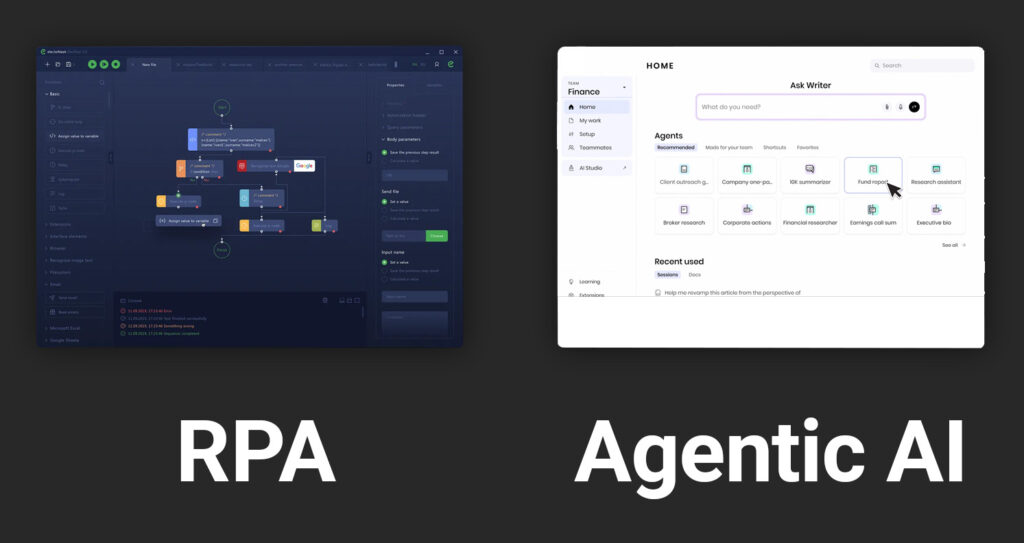

Hi Sharam, good to have you! Today we’ll be talking about some interesting developments in the world of RPA and, of course, AI, which will shock nobody. The first question I’d like to ask you is: In your opinion, how has Agentic AI changed, or perhaps even replaced, classic robotic process automation (RPA) in recent years, and how do you see this evolving in 2026?

Sharam Dadashnia

That’s a very interesting – and in 2026, entirely expected – question, and there are two key aspects to consider. The first is integration. Traditional RPA relied on software robots interacting with user interfaces, essentially mimicking human behaviour across existing systems. In a nutshell, bots automated processes by clicking and typing in place of humans. For many years, this served as a bridging technology.

“Classic RPA was a bridging technology — Agentic AI addresses the reality of knowledge work and decision-making.”

However, modern software architectures now expose APIs directly, making this UI-based automation largely obsolete. This shift is reflected in the market: the major RPA vendors have seen declining valuations and revenues over the past several years. Classic RPA is not only increasingly unnecessary but also inherently rigid. Rule-based automations struggle to adapt to changing user interfaces or dynamic environments.

The second aspect relates to knowledge work. In real business processes, decisions are rarely simple rule executions. Humans gather information from multiple sources, assess context, make judgements, and then communicate outcomes back to systems. This is precisely where Agentic AI adds significant value.

Agentic AI can support, or even fully automate, the preparatory phase of decision-making by aggregating data, analysing documents, and presenting context-aware recommendations. Many companies currently favour a human-in-the-loop approach, where the AI prepares decisions and humans retain final responsibility. This is understandable, given that no business is ready to delegate accountability entirely to AI.

To give a concrete example from the insurance sector: case processing that previously took 20 to 30 minutes can be reduced to around five minutes when all relevant information is analysed and prepared in advance. Once organisations gain sufficient trust in these systems, full automation becomes a realistic next step.

Sebastian Dietrich

Completely understandable. You’ve mentioned trust several times, which is especially relevant for regulated industries, and many of these solutions rely on cloud technologies. Given the current geopolitical tensions between the EU, the East, and the West, how do you see trust evolving in this context, for example with initiatives like the EU Sovereign Cloud?

Sharam Dadashnia

In practice, we see two dominant patterns. On the one hand, many AI services are still US-based. On the other hand, US hyperscalers now operate data centres within Europe, including Germany, enabling highly secure, isolated AI solutions that comply with EU sovereignty requirements.

The key point is that AI adoption is always use-case driven. Not every scenario requires maximum computational power from a service located outside the EU. In many cases, we have already implemented dedicated, protected AI systems, particularly for the public sector and other highly regulated environments, that run entirely within controlled data centres and are fully GDPR-compliant.

When working with public or non-sensitive data, the regulatory hurdles are naturally lower. Each project requires an individual assessment of data sensitivity, compliance needs, and technical requirements. Importantly, organisations today have real choice: European providers like Mistral, as well as open-source models, can be integrated and operated as managed services. We deliberately avoid exclusivity with any single provider.

Sebastian Dietrich

That’s good to hear, since offering choice in times like these is crucial for many companies hoping to implement new technologies. Let’s talk about AI models and regulation: with the EU AI Act setting new guidelines, do you see it as limiting innovation and automation, or as a necessary corrective step?

Sharam Dadashnia

This seems like a sensitive subject, but I don’t think it should be. From my perspective, the AI Act, coming fully into force this year, introduces a necessary framework. It forces organisations to actively assess whether an AI use case is highly critical, sensitive, or relatively low-risk.

“The EU AI Act doesn’t limit innovation; it creates clarity and trust.”

I see this as a positive development amid the current AI hype. It helps prevent careless handling of sensitive data, such as feeding confidential information into unsecured systems. The regulation doesn’t limit innovation; it adds transparency. Essentially, it acts as a labelling mechanism that helps organisations understand which services are appropriate for which use cases.

With this clarity, it becomes easier to design compliant solutions, something we are already doing successfully with our customers today. We don’t see the AI Act as an obstacle, but as an additional layer of assurance.

Sebastian Dietrich

Good to hear, because many AI providers seem to view the EU AI Act differently. As a platform provider, how do we ensure technological sovereignty over business processes while integrating existing solutions and remaining future-proof?

Sharam Dadashnia

Our platform allows us to maintain full transparency and control at every step of a process. We can see exactly where data originates, how it is processed, and where it is sent.

If an organisation is using AI services outside the EU, such as certain well-known US-based APIs, we can identify this and make it visible. This enables active compliance monitoring and informed decision-making. In some cases, this may be perfectly acceptable; in others, it may require reassessment from a regulatory or risk perspective.

This transparency is crucial. It allows organisations to understand their current setup and make deliberate choices about sovereignty, compliance, and future architecture.

Sebastian Dietrich

That’s a nice way to put it, but there is a need for even more technological advancement. We are seeing growing interest in multi-agent systems and open-source approaches. Are companies actively waiting for a European open-source AI movement to mature?

Sharam Dadashnia

Many organisations are already experimenting with AI agents in isolated use cases. Broadly speaking, we have seen two approaches.

The first is a disruptive approach, where companies fundamentally rethink entire divisions or processes with an AI-first mindset. That is a very bold move. The second, far more common in German-speaking countries, is an evolutionary approach, where existing processes are gradually enhanced with AI.

While the strategic intent may already exist, large-scale implementation is still rare. Most organisations start small to build confidence and trust. Our approach is to identify process steps where the balance between effort and impact is optimal. These projects deliver quick wins, build trust in the technology, and create momentum for larger initiatives.

Start-ups often adopt AI-first strategies from day one. Established enterprises tend to move more cautiously, but steadily.

Sebastian Dietrich

There also seems to be a preference for starting with a proven vendor rather than going directly into open source.

Sharam Dadashnia

That largely depends on organisational maturity and internal capabilities. Building in-house expertise takes time, especially in a relatively new discipline where experienced talent is scarce.

Large enterprises often explore both paths, open source and enterprise solutions, in parallel. We ourselves leverage open-source models where appropriate, but we also recognise the complexity involved. Having worked with AI-driven process automation for over five years, we have already overcome many of the early challenges.

Choosing an experienced vendor can significantly reduce time, cost, and risk. Just as with any complex system, professional support becomes indispensable once scale, reliability, and compliance are required.

Sebastian Dietrich

Finally, do you have any closing thoughts on 2026 for the topics we’ve discussed today?

Sharam Dadashnia

For me, 2026 is a decisive year for AI adoption in German-speaking countries and across Europe. While public discourse often paints an overly optimistic picture, the reality is that many organisations are still in early stages.

At the same time, workloads are increasing and capacity is limited. Demographic change, especially the retirement of the baby-boomer generation, will lead to a significant loss of expertise. AI and process automation are essential tools to close this gap without compromising quality.

“Europe should not become a digital colony of the US or Asia. That is the mission we are pursuing, and the time to start is now.”

If Europe fails to act now, others will move faster. My goal is clear: we must remain competitive, independent, and confident in our own solutions. Europe should not become a digital colony of the US or Asia. That is the mission we are pursuing, and the time to start is now.